From Output to Outcome: Why Defining Outcomes in Data & AI Products Is So Challenging

And why GenAI makes it even harder

In this seventh edition of our newsletter, we dive deep into the ever-challenging task of defining an outcome, especially for Data & AI Products. If you haven’t already, we encourage you to read our previous editions, especially the latest one (“AI & Product: Two Development Cycles to Unify”) and the first one, which explains the Data Death Cycle.

Happy reading, and we welcome your feedback.

Let's face it—we're all guilty of the data world's equivalent of "Look, I made a thing!" We build beautiful algorithms and pristine dashboards, then proudly plant our flag and declare victory. Mission accomplished! Except... is it really?

Spoiler alert: it's not.

If you've ever spent six weeks crafting the perfect analytical tool only to watch it gather dust in a forgotten company server folder—congrats! You were likely focused on output rather than outcome.

And you’re not alone. We've built a culture where shipping stuff gets applause, while real change gets lost in the shuffle.

Data & AI teams often work in project mode, not with a product mindset. The difference lies in what you're optimizing for.

A project mindset focuses on output—the deliverable. A product mindset is driven by outcome.

But what exactly is an outcome in Data & AI Products? And why is it so tricky to define? That’s what this article explores.

The Million-Dollar Formula Nobody Told You About

One challenge in Data & AI is that we often confuse the asset—transformed data, algorithm, KPI—with the user’s actual experience.

Here's the secret sauce:

Outcome = (Asset: Data or AI) × (User Experience)

Let’s break it down: your forecasting algorithm (the asset) multiplied by how people use it (the experience) equals real-world impact (the outcome).

If either factor is zero, you know the result…

This isn't just poetic math—it’s the difference between digital paperweights and business-changing solutions. Your LLM might be top-tier, but if the UI needs a PhD to operate? Zero impact. Your pipeline might process terabytes, but if no one uses it? Digital tumbleweeds.

Put simply:

Output: a dashboard interface or chatbot UI

Asset: forecasting algorithm, LLM, pipeline, or similarity engine

The outcome isn’t either of those alone—it’s the combination of asset and user experience.

From "I Made a Thing" to "We Solved a Problem"

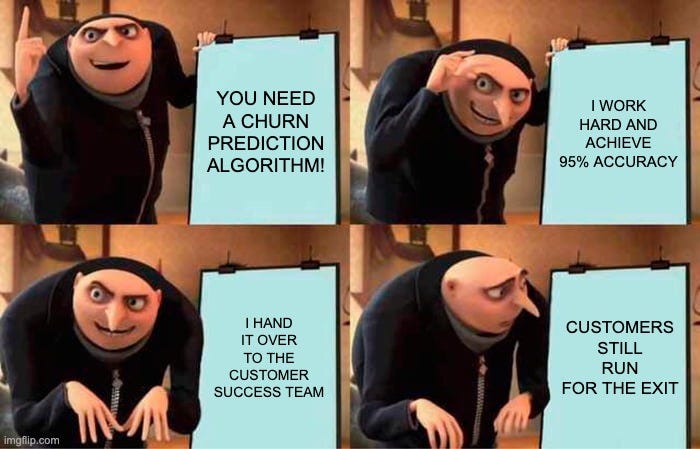

Picture this: your boss asks for a churn prediction model. You deliver a model with 95% accuracy. High-fives!

But three months later, churn hasn't changed. What happened? You delivered the output (a working model) but missed the outcome (reduced churn).

The truth is painfully simple: nobody cares about your fancy algorithm. Nobody lies awake at night dreaming about your perfectly normalized database. They want to double conversions or slash acquisition costs.

Your asset is just a means to an end—and we've collectively forgotten the end part.

Outcome Starts with the Job to Be Done

Here’s where it gets real. Every user has a "Job To Be Done"—a problem to solve or a goal to reach.

Your Data & AI Product either helps them do that better, faster, cheaper... or it doesn't.

When you hit the JTBD sweet spot, magic happens:

Outcome → User Impact (something they’re willing to pay for) → Business Value

Each link in this chain matters. If users find the journey intuitive, they may convert more easily. If the product is truly useful, they may upgrade or stick around.

If people would pay for your tool because it makes work easier, you’ve nailed it. If they’d rather do taxes than use it? Back to the drawing board.

Real-World Example: No More Stockouts in Garden Furniture

Let's explore a concrete example. Meet Sarah, a category manager for garden furniture who is living a personal retail nightmare. Every summer, just when customers are ready to drop serious cash on patio sets, her inventory hits zero.

Sarah's JTBD is painfully clear: "Never miss a sale because we ran out of garden furniture."

To tackle this challenge, we might build an algorithm that forecasts demand using sales velocity, weather, and seasonality. That’s the asset.
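To make "the asset" concrete, here is a minimal sketch of the kind of demand forecast described above. It assumes a deliberately naive multiplicative model (recent sales velocity scaled by a seasonal factor and a weather factor); the `DemandSignals` class, `forecast_demand`, and the factor values are hypothetical illustrations, not the model behind this example. A production forecaster would estimate such factors from historical data.

```python
from dataclasses import dataclass


@dataclass
class DemandSignals:
    """Hypothetical forecast inputs; a real model would estimate these from data."""
    avg_daily_sales: float  # recent sales velocity, e.g. a 7-day average
    seasonal_factor: float  # assumed: > 1.0 in peak season, < 1.0 off-season
    weather_factor: float   # assumed: > 1.0 when warm weather is forecast


def forecast_demand(signals: DemandSignals, horizon_days: int) -> float:
    """Naive multiplicative forecast of total units demanded over the horizon."""
    if horizon_days < 0:
        raise ValueError("horizon_days must be non-negative")
    daily_rate = (
        signals.avg_daily_sales * signals.seasonal_factor * signals.weather_factor
    )
    return daily_rate * horizon_days


# Usage: 2 chairs/day, peak season (x1.5), heat wave forecast (x1.25), 7-day horizon.
signals = DemandSignals(avg_daily_sales=2.0, seasonal_factor=1.5, weather_factor=1.25)
print(forecast_demand(signals, horizon_days=7))  # 26.25
```

On its own, this function only produces a number. It is the asset; nothing changes for Sarah until an experience layer puts that number to work.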

But then comes the experience layer, where the real magic happens. Here, we can imagine several levels:

Level 1: A static dashboard showing current inventory levels. Sarah still has to check it daily, interpret the data, and manually place orders when things look dicey. It's like giving someone a thermometer when they have a fever—helpful for diagnosis, useless for treatment.

Level 2: A Slack notification that dings when inventory drops below 10%. Now we're talking! Sarah gets alerts like "⚠️ Patio umbrella stock critical! Only 5 units left with 7-day average sales of 2/day." It's reactive, but at least proactive about being reactive.

Level 3: An intelligent system that not only alerts Sarah but suggests: "🔔 Adirondack chairs running low! Based on weather forecast (heat wave coming next week) and seasonal patterns, we recommend ordering 50 more units. We've drafted the PO for Supplier X who can deliver fastest. Approve with one click?" THIS is solving Sarah's actual problem!

Level 4: A system that notices the stock running low and automatically initiates reorders within pre-approved parameters, or even identifies and sources alternatives from other suppliers or marketplaces. Sarah's job has fundamentally transformed from "inventory firefighter" to "inventory strategist."
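The difference between Levels 2, 3, and 4 lies mostly in what happens after the forecast is computed. The sketch below illustrates that experience logic under stated assumptions: a hypothetical `target_units` level that the 10% threshold refers to, a forecast passed in as a plain number, and a hypothetical pre-approved cap (`max_auto_units`) for automatic reorders. None of these names come from a real system, and supplier selection and purchase-order drafting are left out.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class StockStatus:
    item: str
    units_on_hand: int
    target_units: int       # hypothetical "full" level that the 10% threshold refers to
    avg_daily_sales: float  # e.g. a 7-day average


def days_of_cover(status: StockStatus) -> float:
    """Days the current stock lasts at the recent sales rate."""
    if status.avg_daily_sales <= 0:
        return math.inf
    return status.units_on_hand / status.avg_daily_sales


def level2_alert(status: StockStatus, threshold: float = 0.10) -> Optional[str]:
    """Level 2: reactive alert when stock falls below a share of the target level."""
    if status.units_on_hand >= threshold * status.target_units:
        return None
    return (
        f"{status.item} stock critical! Only {status.units_on_hand} units left "
        f"with 7-day average sales of {status.avg_daily_sales:g}/day "
        f"(about {days_of_cover(status):.1f} days of cover)."
    )


def level3_suggest_order(status: StockStatus, forecast_units: float) -> int:
    """Level 3: suggest enough units to cover forecast demand beyond current stock."""
    return max(0, math.ceil(forecast_units - status.units_on_hand))


def level4_auto_order(suggested_units: int, max_auto_units: int) -> Tuple[int, int]:
    """Level 4: auto-order up to a pre-approved cap; the remainder needs human approval."""
    auto_units = min(suggested_units, max_auto_units)
    return auto_units, suggested_units - auto_units


# Usage with illustrative numbers (not real data).
umbrellas = StockStatus("Patio umbrella", units_on_hand=5, target_units=60, avg_daily_sales=2.0)
print(level2_alert(umbrellas))
suggested = level3_suggest_order(umbrellas, forecast_units=26.25)
print(suggested)                                        # 22
print(level4_auto_order(suggested, max_auto_units=15))  # (15, 7)
```

The forecasting asset is identical at every level; what changes is how much interpretation and manual work is left to Sarah.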

Same core asset, different experiences, very different outcomes. Level 1 technically works; Levels 3 and 4 actually solve Sarah’s problem.

As products evolve, so should the output—to bring us closer to Sarah’s true JTBD: never miss a sale due to stockouts.

The GenAI Plot Twist: When You Don't Even Know What Problem You're Solving

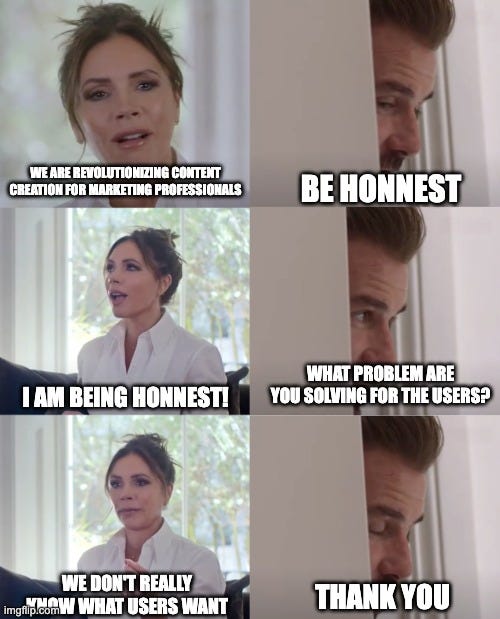

GenAI adds new complexity.

Traditional Data & AI Products enhance workflows: faster processes, better decisions, less manual work. GenAI?

GenAI redefines workflows entirely.

Manual tasks may now be fully automated. The challenge? The Job To Be Done is usually unclear at the start.

Think back to ChatGPT’s launch. OpenAI’s message was essentially: "Here’s a language model in a chat interface. Have fun!" No fixed JTBD. Just an open playground.

They optimized for learning—not perfection.

What followed was an outcome-discovery masterclass:

Writers used it to overcome writer's block

Programmers used it to debug code

Students used it to explain complex concepts

Marketers used it to generate campaign ideas

Family members used it to write passive-aggressive emails to their homeowners' association :)

Even OpenAI didn’t foresee all these use cases. Rather than specifying them upfront, they observed how people used the product and improved both the asset (fewer hallucinations, plugins) and the experience (document uploads, memory).

In GenAI, "ship and learn" is key. The JTBD emerges through usage, not upfront specifications.

Even today, there are trade-offs: some users prioritize accuracy, others creativity. Some want reliable summaries; others want imaginative storytelling.

Same product, wildly different expectations.

The Subjective Outcome Problem: One Product, Many Truths

Even with a clear goal, users define success differently. This variability creates one of the most fundamental challenges in defining outcomes: context and subjectivity.

Take our own example. We (Anne-Claire and Yoann) are working together on our upcoming book on Crafting Impactful Data & AI Products, and we set ourselves the task of generating its first cover.

Here are our generated covers:

Same product, same goal. One image. Two people. Multiple iterations. Very different outputs.

Why? Different preferences and interpretations of the JTBD—hence, different outcomes.

It’s not just taste—it’s about what each person sees as success.

And therein lies the ultimate outcome challenge: subjectivity. Unlike the clean binary world of "the dashboard loaded in under 2 seconds" (an output metric), outcomes live in the messy human realm of context, emotion, and perception.

Four Steps To Become Outcome-Oriented

So what's a data professional to do in this brave new world of outcome-oriented thinking? Here's your battle plan:

Start with the JTBD, not the solution. Before writing a single line of code, understand what your user is really trying to accomplish. Hint: it's rarely "view a dashboard" or "interact with an AI."

Co-create with users throughout. Don't disappear for three months and return with a "perfect" solution. Build alongside users, letting their feedback shape the product in real time.

Measure what matters, not what's easy. Clicks and views are comfortingly quantifiable but ultimately meaningless. Did the garden furniture stay in stock? Did sales increase? Did the manager spend less time firefighting inventory issues?

Embrace evolution over perfection. Start with a minimum viable product that creates some value, then iterate based on real-world usage. ChatGPT didn't launch with plugins, memory, or web browsing—those came later as user needs emerged.
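As a minimal sketch of "measure what matters," the snippet below contrasts an output metric (dashboard views) with two outcome metrics for the garden furniture case: the share of days that ended out of stock and the estimated unmet demand. The `DayRecord` fields and all sample numbers are hypothetical; in practice, estimating demand you could not serve is itself a modeling problem.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DayRecord:
    units_on_hand: int       # stock left at the end of the day
    estimated_demand: float  # units customers would have bought (hypothetical estimate)
    units_sold: int
    dashboard_views: int     # output metric: easy to count, says little about impact


def total_views(days: List[DayRecord]) -> int:
    """Output metric: how often the dashboard was opened."""
    return sum(d.dashboard_views for d in days)


def stockout_rate(days: List[DayRecord]) -> float:
    """Outcome metric: share of days that ended with no stock."""
    if not days:
        return 0.0
    return sum(1 for d in days if d.units_on_hand == 0) / len(days)


def lost_sales(days: List[DayRecord]) -> float:
    """Outcome metric: estimated demand that went unserved."""
    return sum(max(0.0, d.estimated_demand - d.units_sold) for d in days)


# Illustrative numbers only: four days before and after a Level 3 style assistant.
before = [
    DayRecord(0, 10, 4, 3), DayRecord(0, 8, 0, 5),
    DayRecord(12, 6, 6, 2), DayRecord(6, 6, 6, 1),
]
after = [
    DayRecord(20, 10, 10, 0), DayRecord(12, 8, 8, 1),
    DayRecord(6, 6, 6, 0), DayRecord(4, 6, 6, 0),
]

for label, days in (("before", before), ("after", after)):
    print(label, total_views(days), stockout_rate(days), lost_sales(days))
# before 11 0.5 14.0
# after 1 0.0 0.0
```

In these made-up numbers, dashboard views fall while both outcome metrics improve, which is exactly the case where tracking the output metric alone would point the wrong way.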

Nobody builds a shrine for Version 2.3 of the Analytics Report. But they might name a room after the person who helped reduce churn by half.

So ask yourself: are you making things—or making things happen?

Because in the end, your impressive output is just expensive digital furniture unless it drives the outcomes that actually matter.

And you? What do you see as the biggest challenge in defining outcomes for AI-driven products?